Original article. If you repost it, please credit the source and keep the original URL.

This article gives a brief introduction to nginx's ngx_http_upstream_module module. It covers the following directives: upstream, server, ip_hash, keepalive, and least_conn.

The ngx_http_upstream_module module lets you define groups of servers that can be referenced from the proxy_pass, fastcgi_pass, and memcached_pass directives.

Example Configuration

```nginx
upstream backend {
    server backend1.example.com weight=5;
    server backend2.example.com:8080;
    server unix:/tmp/backend3;

    server backup1.example.com:8080 backup;
    server backup2.example.com:8080 backup;
}

server {
    location / {
        proxy_pass http://backend;
    }
}
```

Original nginx documentation:

The ngx_http_upstream_module module allows to define groups of servers that can be referenced from the proxy_pass, fastcgi_pass, and memcached_pass directives.


1. upstream

| syntax: | upstream name { ... } |
|---|---|
| default: | — |
| context: | http |

Defines a group of servers. Servers can listen on different ports. In addition, servers listening on TCP and UNIX-domain sockets can be mixed.

Example:

```nginx
upstream backend {
    server backend1.example.com weight=5;
    server 127.0.0.1:8080 max_fails=3 fail_timeout=30s;
    server unix:/tmp/backend3;
}
```

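By default, requests are distributed between the servers using a weighted round-robin method. In the example above, every 7 requests are distributed as follows: 5 go to backend1.example.com, and the second and third servers receive one each. If an error occurs while communicating with a server, the request is passed to the next server, and so on until all functioning servers have been tried. If no successful response can be obtained from any of them, the client receives the result of contacting the last server.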

Original nginx documentation:

Defines a group of servers. Servers can listen on different ports. In addition, servers listening on TCP and UNIX-domain sockets can be mixed.


By default, requests are distributed between servers using a weighted round-robin balancing method. In the above example, each 7 requests will be distributed as follows: 5 requests to backend1.example.com and one request to each of second and third servers. If an error occurs when communicating with the server, a request will be passed to the next server, and so on until all of the functioning servers will be tried. If a successful response could not be obtained from any of the servers, the client will be returned the result of contacting the last server.
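The weighted round-robin distribution and the failover rule described above can be illustrated with a small Python sketch. It is modelled on the "smooth" weighted round-robin that nginx's round-robin balancer is generally described as using, but it is a simplification and not nginx's code. It ignores effective-weight reduction after errors, max_fails accounting, and backup servers. `Peer`, `pick`, and `send_with_failover` are hypothetical names, and `send` stands in for a real upstream request.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Peer:
    name: str
    weight: int = 1
    current_weight: int = 0  # running score used by the smooth WRR step


def pick(peers, exclude=frozenset()):
    """One smooth weighted round-robin step over peers not in `exclude`."""
    total = 0
    best = None
    for p in peers:
        if p.name in exclude:
            continue
        p.current_weight += p.weight
        total += p.weight
        if best is None or p.current_weight > best.current_weight:
            best = p
    best.current_weight -= total  # the winner gives back the total weight
    return best


def send_with_failover(peers, send):
    """Try each server at most once; return the last result if all fail.

    `send(peer)` is a hypothetical callback returning (ok, response).
    """
    tried = set()
    result = None
    for _ in range(len(peers)):
        peer = pick(peers, exclude=tried)
        tried.add(peer.name)
        ok, result = send(peer)
        if ok:
            return result
    return result  # no success: the client gets the last server's result


if __name__ == "__main__":
    group = [
        Peer("backend1.example.com", weight=5),
        Peer("127.0.0.1:8080"),
        Peer("unix:/tmp/backend3"),
    ]
    order = [pick(group).name for _ in range(7)]
    print(order)
    print(Counter(order))
```

With weights 5, 1, and 1, seven consecutive calls to `pick()` return backend1, backend1, 127.0.0.1:8080, backend1, unix:/tmp/backend3, backend1, backend1. This reproduces the 5:1:1 ratio from the text, and the lighter servers are interleaved rather than served in a burst.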

2. server

| syntax: | server address [parameters]; |
|---|---|
| default: | — |
| context: | upstream |

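Defines the address and other parameters of a server. The address can be specified as a domain name or IP address with an optional port, or as a UNIX-domain socket path specified after the “unix:” prefix. If no port is specified, port 80 is used. A domain name that resolves to several IP addresses defines multiple servers.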

The following parameters can be used:

weight=number

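sets the weight of the server; the default is 1.

max_fails=number

sets the number of unsuccessful attempts to communicate with the server that must happen within the time set by the fail_timeout parameter for the server to be considered down. The server then stays down for a period also set by fail_timeout. By default, the number of unsuccessful attempts is 1. A value of 0 disables the accounting of attempts. What counts as an unsuccessful attempt is configured by the proxy_next_upstream, fastcgi_next_upstream, and memcached_next_upstream directives. The http_404 state is not considered an unsuccessful attempt.

fail_timeout=time

sets

the time during which the specified number of unsuccessful attempts must happen for the server to be considered down;

and the period of time the server is then considered down.

By default, the timeout is 10 seconds.

backup

marks the server as a backup server. Requests are passed to it when the primary servers are down.

down

marks the server as permanently down; used together with the ip_hash directive.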

Example:

```nginx
upstream backend {
    server backend1.example.com weight=5;
    server 127.0.0.1:8080 max_fails=3 fail_timeout=30s;
    server unix:/tmp/backend3;

    server backup1.example.com:8080 backup;
}
```

Original nginx documentation:

Defines an address and other parameters of the server. An address can be specified as a domain name or IP address, and an optional port, or as a UNIX-domain socket path specified after the “unix:” prefix. If port is not specified, the port 80 is used. A domain name that resolves to several IP addresses essentially defines multiple servers.

The following parameters can be defined:

weight=number

sets a weight of the server, by default 1.

max_fails=number

sets a number of unsuccessful attempts to communicate with the server during a time set by the fail_timeout parameter after which it will be considered down for a period of time also set by the fail_timeout parameter. By default, the number of unsuccessful attempts is set to 1. A value of zero disables accounting of attempts. What is considered to be an unsuccessful attempt is configured by the proxy_next_upstream, fastcgi_next_upstream, and memcached_next_upstream directives. The http_404 state is not considered an unsuccessful attempt.

fail_timeout=time

sets

a time during which the specified number of unsuccessful attempts to communicate with the server should happen for the server to be considered down;

and a period of time the server will be considered down.

By default, timeout is set to 10 seconds.

backup

marks the server as a backup server. It will be passed requests when the primary servers are down.

down

marks the server as permanently down; used along with the ip_hash directive.
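The interplay of max_fails, fail_timeout, and backup can be sketched as a small state machine. This is a simplified model written for illustration, not nginx's implementation. The bookkeeping fields `fails`, `window_start`, and `down_until` are hypothetical. Failures count only if they fall within one fail_timeout window, and reaching max_fails takes the server out for one fail_timeout period. Backup servers are chosen only when no primary is available.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Server:
    name: str
    max_fails: int = 1          # default described in the text
    fail_timeout: float = 10.0  # seconds; default described in the text
    backup: bool = False
    # Hypothetical bookkeeping fields for this sketch:
    fails: int = 0
    window_start: float = 0.0
    down_until: float = 0.0

    def available(self, now: float) -> bool:
        return now >= self.down_until

    def record_failure(self, now: float) -> None:
        if self.max_fails == 0:  # max_fails=0 disables accounting
            return
        if self.fails == 0 or now - self.window_start > self.fail_timeout:
            # start a new fail_timeout window for counting failures
            self.fails = 0
            self.window_start = now
        self.fails += 1
        if self.fails >= self.max_fails:
            # considered down for one fail_timeout period
            self.down_until = now + self.fail_timeout
            self.fails = 0


def choose(servers: List[Server], now: float) -> Optional[Server]:
    """Prefer available primary servers; fall back to backups."""
    for want_backup in (False, True):
        for s in servers:
            if s.backup == want_backup and s.available(now):
                return s  # a real balancer would apply weighted round-robin here
    return None
```

With `max_fails=3 fail_timeout=30s`, failures at t=0, 5, and 10 seconds mark the server down until t=40. In the meantime `choose()` returns the backup server.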


3. ip_hash

| syntax: | ip_hash; |
|---|---|
| default: | — |
| context: | upstream |

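Specifies that a group should use a load-balancing method in which requests are distributed between servers based on client IP addresses. The first three octets of the client's IPv4 address, or the entire IPv6 address, are used as the hashing key. The method ensures that requests from the same client are always passed to the same server. The exception is when that server is considered down: the client's requests are then passed to another server, most probably the same one each time.

IPv6 addresses are supported starting from versions 1.3.2 and 1.2.2.

If one of the servers needs to be temporarily removed, mark it with the down parameter so that the current hashing of client IP addresses is preserved.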

Example:

```nginx
upstream backend {
    ip_hash;

    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com down;
    server backend4.example.com;
}
```

Until versions 1.3.1 and 1.2.2, it was not possible to specify server weights with the ip_hash load-balancing method.

Original nginx documentation:

Specifies that a group should use a load balancing method where requests are distributed between servers based on client IP addresses. The first three octets of the client IPv4 address, or the entire IPv6 address, are used as a hashing key. The method ensures that requests of the same client will always be passed to the same server except when this server is considered down in which case client requests will be passed to another server and most probably it will also be the same server.

IPv6 addresses are supported starting from versions 1.3.2 and 1.2.2.

If one of the servers needs to be temporarily removed, it should be marked with the down parameter in order to preserve the current hashing of client IP addresses.


Until versions 1.3.1 and 1.2.2 it was not possible to specify a weight for servers using the ip_hash load balancing method.
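The following Python sketch shows why marking a server `down` preserves the hashing, rather than deleting the server. Down servers still count toward the total weight, so clients that hashed to a live server keep landing there. Only clients of the down server are re-hashed. The seed 89, multiplier 113, modulus 6271, and the limit of 20 retries come from my reading of nginx's ip_hash module and should be treated as assumptions. `ip_hash_pick` and the `(name, weight, down)` tuples are hypothetical.

```python
import ipaddress
from typing import List, Optional, Tuple

# (name, weight, down): a hypothetical representation of an upstream block
ServerEntry = Tuple[str, int, bool]


def hash_key(client_ip: str) -> bytes:
    """First three octets of an IPv4 address, or all 16 bytes of IPv6."""
    addr = ipaddress.ip_address(client_ip)
    return addr.packed[:3] if addr.version == 4 else addr.packed


def ip_hash_pick(client_ip: str, servers: List[ServerEntry]) -> Optional[str]:
    # Down servers still count toward the total weight, so marking one
    # `down` does not shift where the other clients hash to.
    total_weight = sum(weight for _, weight, _ in servers)
    key = hash_key(client_ip)
    h = 89  # assumed seed; constants below are also assumptions
    for _ in range(20):  # re-mix the hash and retry if the pick is down
        for byte in key:
            h = (h * 113 + byte) % 6271
        w = h % total_weight
        for name, weight, down in servers:
            if w < weight:
                if not down:
                    return name
                break  # landed on a down server: re-hash and try again
            w -= weight
    return None  # nginx would fall back to round-robin here (not modelled)
```

Two clients in the same IPv4 /24, such as 192.168.1.10 and 192.168.1.200, share a hash key and therefore always map to the same server.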

4. keepalive

| syntax: | keepalive connections; |
|---|---|
| default: | — |
| context: | upstream |

This directive appeared in version 1.1.4.

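Activates the cache of connections to upstream servers.

The connections parameter sets the maximum number of idle keepalive connections to upstream servers that are retained in the cache of each worker process. When this number is exceeded, the least recently used connections are closed.

Note in particular that the keepalive directive does not limit the total number of connections that an nginx worker process can open to upstream servers. The connections parameter should be set low enough to let upstream servers also accept new incoming connections.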
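The per-worker idle-connection cache described above behaves like a bounded LRU structure. The Python sketch below is a simplified model, not nginx's code. `IdleConnectionCache`, `release`, and `acquire` are hypothetical names, and connections are represented by string ids. It also shows the caveat from the text: the limit applies only to *idle* connections. When `acquire` finds nothing, the caller simply opens another connection, and nothing here caps that.

```python
from collections import OrderedDict
from typing import List, Optional


class IdleConnectionCache:
    """Simplified model of one worker's keepalive cache (not nginx's code)."""

    def __init__(self, max_idle: int) -> None:
        self.max_idle = max_idle     # the N in `keepalive N;`
        self.idle = OrderedDict()    # conn id -> peer, least recently used first
        self.closed: List[str] = []  # ids closed because the cache was full

    def release(self, conn_id: str, peer: str) -> None:
        """Request finished: park the connection as idle (most recently used)."""
        self.idle[conn_id] = peer
        self.idle.move_to_end(conn_id)
        while len(self.idle) > self.max_idle:
            old_id, _ = self.idle.popitem(last=False)  # evict least recently used
            self.closed.append(old_id)

    def acquire(self, peer: str) -> Optional[str]:
        """Reuse the most recently parked idle connection to `peer`, if any.

        None means the caller opens a new connection; the cache does not
        limit how many connections are open in total.
        """
        for conn_id in reversed(list(self.idle)):
            if self.idle[conn_id] == peer:
                del self.idle[conn_id]
                return conn_id
        return None
```

With `IdleConnectionCache(2)`, parking c1 and c2 (to peer A) and then c3 (to peer B) closes c1, the least recently used connection.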

Example configuration of a memcached upstream with keepalive connections:

```nginx
upstream memcached_backend {
    server 127.0.0.1:11211;
    server 10.0.0.2:11211;

    keepalive 32;
}

server {
    ...

    location /memcached/ {
        set $memcached_key $uri;
        memcached_pass memcached_backend;
    }
}
```

For HTTP, the proxy_http_version directive should be set to “1.1” and the “Connection” header field should be cleared:

```nginx
upstream http_backend {
    server 127.0.0.1:8080;

    keepalive 16;
}

server {
    ...

    location /http/ {
        proxy_pass http://http_backend;
        proxy_http_version 1.1;
        proxy_set_header Connection "";
        ...
    }
}
```

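Alternatively, HTTP/1.0 persistent connections can be used by passing the “Connection: Keep-Alive” header field to an upstream server, though this is not recommended.

For FastCGI servers, fastcgi_keep_conn must be set for keepalive connections to work: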

```nginx
upstream fastcgi_backend {
    server 127.0.0.1:9000;

    keepalive 8;
}

server {
    ...

    location /fastcgi/ {
        fastcgi_pass fastcgi_backend;
        fastcgi_keep_conn on;
        ...
    }
}
```

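When using a load-balancing method other than the default round-robin, that method must be activated before the keepalive directive.

The SCGI and uwsgi protocols have no notion of keepalive connections.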

Original nginx documentation:

Activates cache of connections to upstream servers.

The connections parameter sets the maximum number of idle keepalive connections to upstream servers that are retained in the cache per one worker process. When this number is exceeded, the least recently used connections are closed.

It should be particularly noted that keepalive directive does not limit the total number of connections that nginx worker process can open to upstream servers. The connections parameter should be set low enough to allow upstream servers to process additional new incoming connections as well.


For HTTP, the proxy_http_version directive should be set to “1.1” and the “Connection” header field should be cleared.

Alternatively, HTTP/1.0 persistent connections can be used by passing the “Connection: Keep-Alive” header field to an upstream server, though this is not recommended.

For FastCGI servers, it is required to set fastcgi_keep_conn for keepalive connections to work.

When using load balancer methods other than the default round-robin, it is necessary to activate them before the keepalive directive.

SCGI and uwsgi protocols do not have a notion of keepalive connections.

5. least_conn

| syntax: | least_conn; |
|---|---|
| default: | — |
| context: | upstream |

This directive appeared in versions 1.3.1 and 1.2.2.

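Specifies that a group should use a load-balancing method in which a request is passed to the server with the least number of active connections, taking server weights into account. If there are several such servers, they are tried using weighted round-robin.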

Original nginx documentation:

Specifies that a group should use a load balancing method where a request is passed to the server with the least number of active connections, taking into account weights of servers. If there are several such servers, they are tried using a weighted round-robin balancing method.
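The least_conn rule can be sketched as follows. The sketch compares active connections relative to weight by cross-multiplication (`conns_a * weight_b` against `conns_b * weight_a`), which avoids floating-point division. It then breaks ties with a smooth weighted round-robin step among the tied servers. This is a simplified illustration, not nginx's code. `Peer` and `least_conn_pick` are hypothetical names, and decrementing `conns` when a request completes is not modelled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Peer:
    name: str
    weight: int = 1
    conns: int = 0           # active connections
    current_weight: int = 0  # state for weighted round-robin tie-breaking


def least_conn_pick(peers: List[Peer]) -> Peer:
    # 1. Collect the peers with the smallest conns/weight ratio.
    best: List[Peer] = []
    for p in peers:
        if not best or p.conns * best[0].weight < best[0].conns * p.weight:
            best = [p]
        elif p.conns * best[0].weight == best[0].conns * p.weight:
            best.append(p)
    # 2. Break ties with one smooth weighted round-robin step.
    total = 0
    chosen = None
    for p in best:
        p.current_weight += p.weight
        total += p.weight
        if chosen is None or p.current_weight > chosen.current_weight:
            chosen = p
    chosen.current_weight -= total
    chosen.conns += 1  # the request now occupies a connection
    return chosen
```

For example, a server with weight 2 and 3 active connections (ratio 1.5) is preferred over one with weight 1 and 2 connections (ratio 2).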

Embedded Variables

The ngx_http_upstream_module module supports the following embedded variables:

$upstream_addr

keeps an IP address and port of the server, or a path to the UNIX-domain socket. If several servers were contacted during request processing, their addresses are separated by commas, e.g. “192.168.1.1:80, 192.168.1.2:80, unix:/tmp/sock”. If an internal redirect from one server group to another happened using “X-Accel-Redirect” or error_page then these server groups are separated by colons, e.g. “192.168.1.1:80, 192.168.1.2:80, unix:/tmp/sock : 192.168.10.1:80, 192.168.10.2:80”.

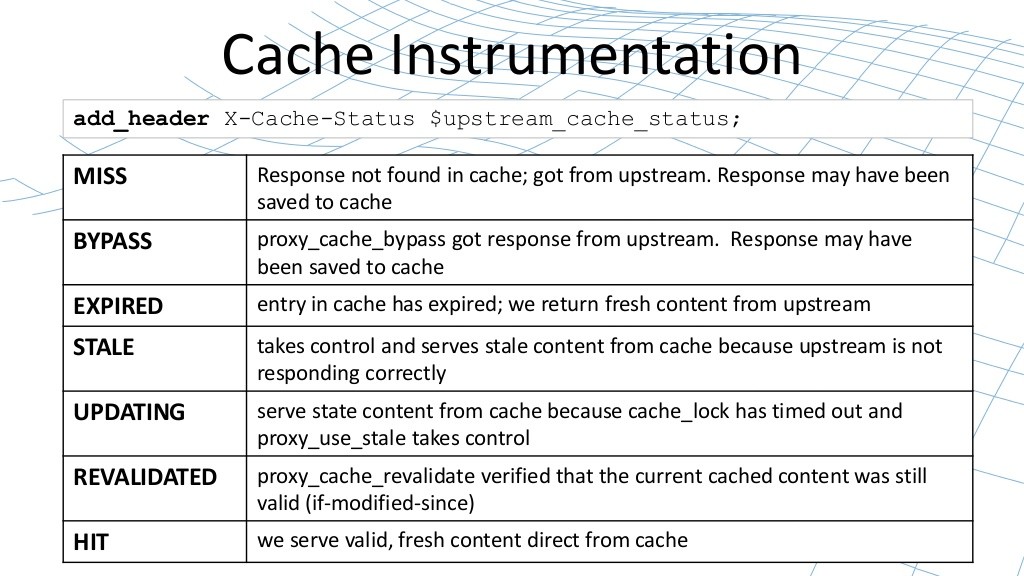
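The comma and colon separators described for $upstream_addr can be split apart as in the Python sketch below, for example when post-processing access logs. `parse_upstream_var` is a hypothetical helper. It splits on " : " *with* surrounding spaces, so a value such as `unix:/tmp/sock` stays intact.

```python
from typing import List


def parse_upstream_var(value: str) -> List[List[str]]:
    """Split an $upstream_* value into server groups, then into entries.

    Groups from internal redirects are separated by " : " and servers within
    a group by ", ". Splitting on " : " (with spaces) keeps "unix:..." intact.
    """
    return [group.split(", ") for group in value.split(" : ")]
```

Applied to the example value above, this yields two groups: the first holds `192.168.1.1:80`, `192.168.1.2:80`, and `unix:/tmp/sock`, and the second holds `192.168.10.1:80` and `192.168.10.2:80`.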

$upstream_cache_status

keeps status of accessing a response cache (0.8.3). The status can be one of “MISS”, “BYPASS”, “EXPIRED”, “STALE”, “UPDATING” or “HIT”.

$upstream_response_length

keeps lengths of responses obtained from upstream servers (0.7.27); lengths are kept in bytes. Several responses are separated by commas and colons like in the $upstream_addr variable.

$upstream_response_time

keeps times of responses obtained from upstream servers; times are kept in seconds with a milliseconds resolution. Several responses are separated by commas and colons like in the $upstream_addr variable.

$upstream_status

keeps codes of responses obtained from upstream servers. Several responses are separated by commas and colons like in the $upstream_addr variable.
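$upstream_addr, $upstream_status, and $upstream_response_time use the same separators, so their values can be lined up position by position to see which attempt failed and how long each one took. The Python sketch below assumes the three variables were logged side by side. The helper names and the sample values are hypothetical. Response times are converted from seconds (with millisecond resolution, as stated above) to whole milliseconds.

```python
from typing import List, NamedTuple


class Attempt(NamedTuple):
    addr: str
    status: str
    time_ms: int


def split_flat(value: str) -> List[str]:
    # Flatten redirect groups (" : ") and per-server entries (", ").
    return [item for group in value.split(" : ") for item in group.split(", ")]


def attempts(addr: str, status: str, response_time: str) -> List[Attempt]:
    """Pair the n-th address with the n-th status and n-th response time."""
    return [
        Attempt(a, s, round(float(t) * 1000))  # seconds -> milliseconds
        for a, s, t in zip(split_flat(addr), split_flat(status),
                           split_flat(response_time))
    ]
```

For hypothetical log values `10.0.0.1:80, 10.0.0.2:80`, `502, 200`, and `0.004, 0.120`, the first server answered 502 after 4 ms and the second answered 200 after 120 ms.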

$upstream_http_...

keep server response header fields. For example, the “Server” response header field is made available through the $upstream_http_server variable. The rules of converting header field names to variable names are the same as for variables starting with the “$http_” prefix. Only the last server’s response header fields are saved.
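The naming rule shared with `$http_*` variables (lowercase the header name and replace dashes with underscores) can be expressed as a one-line Python function. `upstream_header_var` is a hypothetical helper, useful for example when generating log formats.

```python
def upstream_header_var(header_name: str) -> str:
    """Map a response header name to its $upstream_http_* variable name."""
    # Same rule as $http_*: lowercase, and dashes become underscores.
    return "$upstream_http_" + header_name.lower().replace("-", "_")
```

For instance, the “X-Powered-By” header is available as `$upstream_http_x_powered_by`.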