Content summarized from material found online.

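1. A Field can only be searched if its Field.Index is set to TOKENIZED or UN_TOKENIZED (renamed ANALYZED and NOT_ANALYZED in Lucene 2.4). TOKENIZED passes the field content through the analyzer and indexes each resulting term. UN_TOKENIZED indexes the whole value as a single term, so the field matches only when the query term equals the entire value.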
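The difference between the two modes can be modeled without Lucene. The sketch below is a plain-Java stand-in. The class `IndexModeDemo` is hypothetical, and its `analyze` method only roughly imitates an analyzer such as SimpleAnalyzer by splitting on non-letters and lowercasing. A TOKENIZED field yields one term per token. An UN_TOKENIZED field yields a single term holding the whole value.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Plain-Java model (no Lucene dependency) of how a single-term query matches
// TOKENIZED vs UN_TOKENIZED fields. The class name is hypothetical.
class IndexModeDemo {

    // Rough stand-in for a SimpleAnalyzer-like analyzer: split on non-letters, lowercase.
    static List<String> analyze(String text) {
        List<String> terms = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("[^\\p{L}]+")) {
            if (!t.isEmpty()) {
                terms.add(t);
            }
        }
        return terms;
    }

    // TOKENIZED: every token of the value is an indexed term.
    static boolean matchesTokenized(String fieldValue, String queryTerm) {
        return analyze(fieldValue).contains(queryTerm);
    }

    // UN_TOKENIZED: the whole value is one indexed term, so only an exact match hits.
    static boolean matchesUntokenized(String fieldValue, String queryTerm) {
        return fieldValue.equals(queryTerm);
    }

    public static void main(String[] args) {
        String title = "Lucene in Action";
        System.out.println(matchesTokenized(title, "lucene"));             // true
        System.out.println(matchesUntokenized(title, "lucene"));           // false
        System.out.println(matchesUntokenized(title, "Lucene in Action")); // true
    }
}
```

Note that in Lucene a TermQuery's term is not analyzed. In this model, querying the tokenized field for "Lucene" (capitalized) would therefore miss, because the indexed terms were lowercased.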

2. If Field.Store is NO, the field's value cannot be read back from the index in search results: Document.get() returns null for that field.
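Storing and indexing are independent decisions, and a toy model makes the null result concrete. The sketch below is a hypothetical plain-Java class, not Lucene code. It keeps the indexed terms and the stored values in separate maps, much as Lucene keeps the inverted index apart from stored fields. For brevity, it indexes each value as a single term.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical toy document: searchable terms and stored values live in separate maps.
class StoredFieldDemo {
    private final Map<String, Set<String>> indexedTerms = new HashMap<>();
    private final Map<String, String> storedValues = new HashMap<>();

    // The whole value is indexed as one term here (as with UN_TOKENIZED).
    void add(String name, String value, boolean store) {
        indexedTerms.computeIfAbsent(name, k -> new HashSet<>()).add(value);
        if (store) {
            storedValues.put(name, value);
        }
    }

    boolean matches(String name, String term) {
        Set<String> terms = indexedTerms.get(name);
        return terms != null && terms.contains(term);
    }

    // Mirrors Document.get(): null when the field was added without storing.
    String get(String name) {
        return storedValues.get(name);
    }

    public static void main(String[] args) {
        StoredFieldDemo doc = new StoredFieldDemo();
        doc.add("id", "42", true);
        doc.add("body", "secret", false);
        System.out.println(doc.matches("body", "secret")); // true: still searchable
        System.out.println(doc.get("body"));               // null: value was not stored
        System.out.println(doc.get("id"));                 // 42
    }
}
```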

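Additional notes:

Field.Store.YES: store the field value (the original value, before analysis).

Field.Store.NO: do not store the value. Storing is independent of indexing, so an unstored field can still be indexed and searched.

Field.Store.COMPRESS: store the value compressed. This is meant for long text or binary values and costs some performance (deprecated in Lucene 2.9 and removed in 3.0).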

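Field.Index.ANALYZED: analyze (tokenize) the value and index the resulting terms.

Field.Index.ANALYZED_NO_NORMS: like ANALYZED, but without norms. Norms normally take one byte per document for the field and encode the index-time boost together with field-length normalization. Omitting them saves that space (and memory at search time), but index-time boosts and length normalization are lost.

Field.Index.NOT_ANALYZED: index the whole value as a single term, without analysis.

Field.Index.NOT_ANALYZED_NO_NORMS: like NOT_ANALYZED, but without norms (no per-document norm byte).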
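The one-byte detail refers to norms. The plain-Java sketch below shows what the NO_NORMS variants switch off. It assumes DefaultSimilarity's length norm of 1/sqrt(numTerms), multiplied by the index-time boost. The class `NormsDemo` is hypothetical, and Lucene's lossy one-byte encoding of this float is not reproduced.

```java
// Hypothetical model of a Lucene 2.x/3.x field norm, assuming DefaultSimilarity:
// norm = indexTimeBoost * 1/sqrt(numTerms). Lucene encodes this into one byte per
// document per field; with *_NO_NORMS nothing is stored and the norm acts as 1.0.
class NormsDemo {

    static float lengthNorm(int numTerms) {
        return (float) (1.0 / Math.sqrt(numTerms));
    }

    static float norm(float indexTimeBoost, int numTerms, boolean omitNorms) {
        if (omitNorms) {
            return 1.0f; // no length normalization, and the index-time boost is lost
        }
        return indexTimeBoost * lengthNorm(numTerms);
    }

    public static void main(String[] args) {
        System.out.println(norm(1.0f, 4, false));   // 0.5: short field scores higher
        System.out.println(norm(1.0f, 100, false)); // 0.1: long field scores lower
        System.out.println(norm(2.0f, 4, true));    // 1.0: boost and length ignored
    }
}
```

This is why NO_NORMS suits short fields such as IDs or keywords, where field length carries no meaning.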

3. TermVector

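For one field of one document, a term vector records which terms occur in that field and how often each occurs (its term frequency).

Field.TermVector.YES: store the term vector of this field for every document (terms and frequencies).

Field.TermVector.NO: do not store a term vector.

Field.TermVector.WITH_POSITIONS: store the term vector plus the position of each occurrence.

Field.TermVector.WITH_OFFSETS: store the term vector plus the character offsets of each occurrence.

Field.TermVector.WITH_POSITIONS_OFFSETS: store the term vector plus both positions and offsets.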
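The five options reduce to three flags: whether a term vector is stored at all, whether positions are added, and whether offsets are added. The hypothetical enum below, a plain-Java stand-in rather than Lucene's own type, spells out that mapping.

```java
// Hypothetical enum mirroring Field.TermVector's five options as three flags.
enum TermVectorOption {
    NO(false, false, false),
    YES(true, false, false),
    WITH_POSITIONS(true, true, false),
    WITH_OFFSETS(true, false, true),
    WITH_POSITIONS_OFFSETS(true, true, true);

    final boolean stored;        // terms and frequencies are kept
    final boolean withPositions; // token positions are added
    final boolean withOffsets;   // character start/end offsets are added

    TermVectorOption(boolean stored, boolean withPositions, boolean withOffsets) {
        this.stored = stored;
        this.withPositions = withPositions;
        this.withOffsets = withOffsets;
    }

    public static void main(String[] args) {
        for (TermVectorOption o : values()) {
            System.out.printf("%-22s stored=%b positions=%b offsets=%b%n",
                    o, o.stored, o.withPositions, o.withOffsets);
        }
    }
}
```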

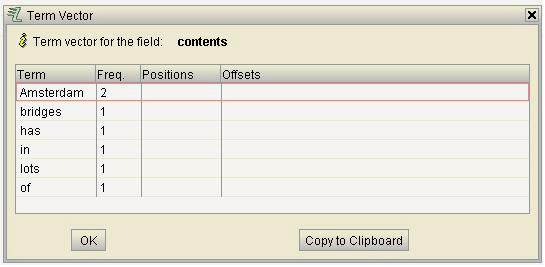

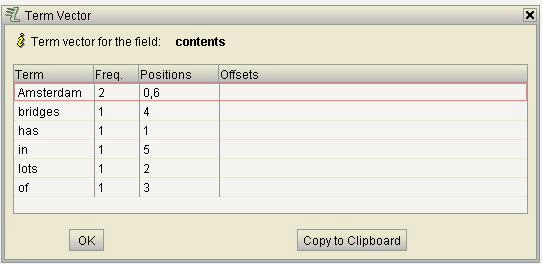

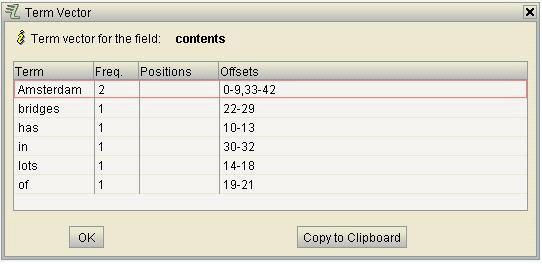

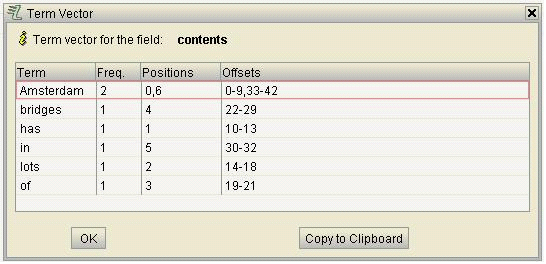

4. Lucene TermVector illustrated

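Term vectors can only be stored for an indexed field. Combining Field.Index.NO with any TermVector option other than NO makes the Field constructor throw IllegalArgumentException. Field.Store.YES is a separate choice, although highlighting (section 5) still needs the stored text to build its output.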
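The rule can be sketched as a validation function. The class below is a plain-Java model of two argument checks that the Lucene 2.x/3.x Field constructor performs. The nested enums are simplified stand-ins, not the Lucene types, and the exception messages are paraphrased.

```java
// Hypothetical model of two consistency checks in Lucene's Field constructor.
class FieldOptionCheck {
    enum Store { YES, NO }
    enum Index { NO, ANALYZED, NOT_ANALYZED }
    enum TermVector { NO, YES, WITH_POSITIONS, WITH_OFFSETS, WITH_POSITIONS_OFFSETS }

    static void validate(Store store, Index index, TermVector termVector) {
        if (index == Index.NO && store == Store.NO) {
            throw new IllegalArgumentException("field is neither indexed nor stored");
        }
        if (index == Index.NO && termVector != TermVector.NO) {
            throw new IllegalArgumentException("term vectors need an indexed field");
        }
    }

    static boolean isValid(Store store, Index index, TermVector termVector) {
        try {
            validate(store, index, termVector);
            return true;
        } catch (IllegalArgumentException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // A term vector without a stored value is allowed.
        System.out.println(isValid(Store.NO, Index.ANALYZED, TermVector.WITH_POSITIONS_OFFSETS)); // true
        // A term vector on an unindexed field is rejected, even if the value is stored.
        System.out.println(isValid(Store.YES, Index.NO, TermVector.YES)); // false
    }
}
```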

Indexed text: Amsterdam has lots of bridges in Amsterdam

Analyzer: WhitespaceAnalyzer

4.1 TermVector.YES

4.2 TermVector.WITH_POSITIONS

4.3 TermVector.WITH_OFFSETS

4.4 TermVector.WITH_POSITIONS_OFFSETS
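What the four variants record for the sample sentence can be worked out by hand. The sketch below is a plain-Java simulation of WhitespaceAnalyzer. The class `TermVectorSketch` is hypothetical and is not Lucene code. It splits on whitespace without lowercasing and records the following for each term: its frequency, its token positions, and its [start, end) character offsets. TermVector.YES keeps only terms and frequencies. WITH_POSITIONS adds the positions, WITH_OFFSETS adds the offsets, and WITH_POSITIONS_OFFSETS keeps both.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical simulation of the term vector for one field, tokenized on whitespace only.
class TermVectorSketch {

    static final class Entry {
        final List<Integer> positions = new ArrayList<>(); // token index of each occurrence
        final List<int[]> offsets = new ArrayList<>();     // {start, end}, end exclusive

        int freq() {
            return positions.size();
        }
    }

    static Map<String, Entry> build(String text) {
        Map<String, Entry> vector = new TreeMap<>(); // terms kept sorted, as in a term vector
        int position = 0;
        int i = 0;
        while (i < text.length()) {
            while (i < text.length() && Character.isWhitespace(text.charAt(i))) {
                i++;
            }
            int start = i;
            while (i < text.length() && !Character.isWhitespace(text.charAt(i))) {
                i++;
            }
            if (start < i) {
                Entry e = vector.computeIfAbsent(text.substring(start, i), k -> new Entry());
                e.positions.add(position++);
                e.offsets.add(new int[] {start, i});
            }
        }
        return vector;
    }

    public static void main(String[] args) {
        Map<String, Entry> v = build("Amsterdam has lots of bridges in Amsterdam");
        for (Map.Entry<String, Entry> me : v.entrySet()) {
            Entry e = me.getValue();
            StringBuilder offs = new StringBuilder();
            for (int[] o : e.offsets) {
                offs.append('[').append(o[0]).append(',').append(o[1]).append(')');
            }
            System.out.println(me.getKey() + " freq=" + e.freq()
                    + " positions=" + e.positions + " offsets=" + offs);
        }
    }
}
```

For the sample text, Amsterdam gets frequency 2, positions [0, 6], and offsets [0,9) and [33,42). Each of bridges, has, in, lots and of occurs once. The sorted order puts the capitalized Amsterdam ahead of the lowercase terms.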

5. Using Lucene TermVector: related-document search and faster highlighting
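The related-document idea in morelikeSearch below works as follows. Take every term from one document's term vector, OR them together as SHOULD clauses, and let documents that share more terms rank higher. The plain-Java model below is hypothetical (`RelatedDocsSketch` is not Lucene code). It replaces Lucene's tf-idf and coord scoring with a simple count of shared terms.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical model: rank documents by how many terms they share with a source document.
class RelatedDocsSketch {

    // docs maps a document id to the set of terms in its term vector.
    static Map<Integer, Integer> related(Map<Integer, Set<String>> docs, int sourceId) {
        Set<String> queryTerms = docs.get(sourceId); // each term acts as one SHOULD clause
        Map<Integer, Integer> sharedCounts = new TreeMap<>();
        for (Map.Entry<Integer, Set<String>> doc : docs.entrySet()) {
            int shared = 0;
            for (String term : doc.getValue()) {
                if (queryTerms.contains(term)) {
                    shared++;
                }
            }
            if (shared > 0) { // a pure-SHOULD query needs at least one matching clause
                sharedCounts.put(doc.getKey(), shared);
            }
        }
        return sharedCounts;
    }

    static Set<String> terms(String... t) {
        return new HashSet<>(Arrays.asList(t));
    }

    public static void main(String[] args) {
        Map<Integer, Set<String>> docs = new TreeMap<>();
        docs.put(0, terms("java", "programming", "language"));
        docs.put(1, terms("english", "people"));
        docs.put(2, terms("java", "people", "language"));
        // The source document matches itself, as it does in the Lucene code.
        System.out.println(related(docs, 0)); // {0=3, 2=2}
    }
}
```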

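```java
import java.io.IOException;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.Field.Index;
import org.apache.lucene.document.Field.Store;
import org.apache.lucene.document.Field.TermVector;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.Term;
import org.apache.lucene.index.TermFreqVector;
import org.apache.lucene.index.TermPositionVector;
import org.apache.lucene.search.BooleanClause;
import org.apache.lucene.search.BooleanQuery;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.search.highlight.Highlighter;
import org.apache.lucene.search.highlight.InvalidTokenOffsetsException;
import org.apache.lucene.search.highlight.QueryScorer;
import org.apache.lucene.search.highlight.SimpleFragmenter;
import org.apache.lucene.search.highlight.SimpleHTMLFormatter;
import org.apache.lucene.search.highlight.TokenSources;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.RAMDirectory;
import org.apache.lucene.util.Version;

// Assumes the Lucene 2.9/3.0 API plus the contrib highlighter. Hits was removed in 3.0,
// so TopDocs/ScoreDoc are used instead.
public class TermVectorTest {

    // StandardAnalyzer emits each CJK character as its own token, so "java" becomes a
    // separate term. SimpleAnalyzer (LetterTokenizer) would glue it to the adjacent
    // Chinese characters, and the "java" query on subject below would find nothing.
    Analyzer analyzer = new StandardAnalyzer(Version.LUCENE_29);
    Directory ramDir = new RAMDirectory();

    public void createRamIndex() throws IOException {
        IndexWriter writer = new IndexWriter(ramDir, analyzer, IndexWriter.MaxFieldLength.LIMITED);

        Document doc1 = new Document();
        doc1.add(new Field("title", "java", Store.YES, Index.ANALYZED));
        doc1.add(new Field("author", "callan", Store.YES, Index.ANALYZED));
        // "Java is a programming language; many people use Java; there are plenty of
        // programming languages, but Java is the most popular"
        doc1.add(new Field("subject", "java一门编程语言,用java的人很多,编程语言也不少,但是java最流行",
                Store.YES, Index.ANALYZED, TermVector.WITH_POSITIONS_OFFSETS));

        Document doc2 = new Document();
        doc2.add(new Field("title", "english", Store.YES, Index.ANALYZED));
        doc2.add(new Field("author", "wcq", Store.YES, Index.ANALYZED));
        // "Many people use English"
        doc2.add(new Field("subject", "英语用的人很多", Store.YES, Index.ANALYZED, TermVector.WITH_POSITIONS_OFFSETS));

        Document doc3 = new Document();
        doc3.add(new Field("title", "asp", Store.YES, Index.ANALYZED));
        doc3.add(new Field("author", "ca", Store.YES, Index.ANALYZED));
        doc3.add(new Field("subject", "英语用的人很多", Store.YES, Index.ANALYZED, TermVector.WITH_POSITIONS_OFFSETS));

        writer.addDocument(doc1);
        writer.addDocument(doc2);
        writer.addDocument(doc3);

        writer.optimize();
        writer.close();
    }

    public void search() throws IOException {
        IndexReader reader = IndexReader.open(ramDir, true); // read-only reader
        IndexSearcher searcher = new IndexSearcher(reader);
        // Look up the term "java" in the title field
        TermQuery query = new TermQuery(new Term("title", "java"));
        TopDocs topDocs = searcher.search(query, null, 10);
        for (ScoreDoc sd : topDocs.scoreDocs) {
            Document doc = searcher.doc(sd.doc);
            System.out.println(doc.get("title"));
            System.out.println(doc.get("subject"));
            System.out.println("moreLike search: ");
            morelikeSearch(reader, sd.doc);
        }
        searcher.close();
        reader.close();
    }

    private void morelikeSearch(IndexReader reader, int id) throws IOException {
        // Term vector of this document's subject field: each term after analysis,
        // with its frequency (and positions/offsets, since they were indexed)
        TermFreqVector vector = reader.getTermFreqVector(id, "subject");
        if (vector == null) {
            return; // no term vector stored for this field
        }
        BooleanQuery query = new BooleanQuery();
        for (String text : vector.getTerms()) {
            // One SHOULD clause per term: documents sharing more terms score higher
            query.add(new TermQuery(new Term("subject", text)), BooleanClause.Occur.SHOULD);
        }
        IndexSearcher searcher = new IndexSearcher(reader);
        TopDocs topDocs = searcher.search(query, null, 10);
        // Display code omitted
        searcher.close();
    }

    // Using term vectors to speed up highlighting
    public void highlightSearch() throws IOException, InvalidTokenOffsetsException {
        IndexReader reader = IndexReader.open(ramDir, true);
        IndexSearcher searcher = new IndexSearcher(reader);
        TermQuery query = new TermQuery(new Term("subject", "java"));
        TopDocs topDocs = searcher.search(query, null, 10);

        // Highlight settings
        SimpleHTMLFormatter formatter = new SimpleHTMLFormatter("<font color='red'>", "</font>");
        Highlighter highlighter = new Highlighter(formatter, new QueryScorer(query));
        // 100 is the fragment size in characters around the keyword; returning the
        // entire text is usually impractical
        highlighter.setTextFragmenter(new SimpleFragmenter(100));

        for (ScoreDoc sd : topDocs.scoreDocs) {
            Document doc = searcher.doc(sd.doc);
            // Rebuild the token stream from the stored term vector instead of re-analyzing;
            // the cast works only because the field was indexed WITH_POSITIONS_OFFSETS
            TermPositionVector tpv = (TermPositionVector) reader.getTermFreqVector(sd.doc, "subject");
            TokenStream tokenStream = TokenSources.getTokenStream(tpv);
            String result = highlighter.getBestFragment(tokenStream, doc.get("subject"));
            System.out.println(doc.get("title"));
            System.out.println(result);
        }
        searcher.close();
        reader.close();
    }

    public static void main(String[] args) throws Exception {
        TermVectorTest t = new TermVectorTest();
        t.createRamIndex();
        t.search();
        t.highlightSearch();
    }
}
```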
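The highlighting speed-up comes from the stored offsets. The highlighter rebuilds tokens from the term vector instead of running the analyzer again, then marks up the original stored text at those offsets. The plain-Java sketch below is hypothetical (`OffsetHighlightSketch` is not the Highlighter API). It covers only that last step, with no fragmenting or scoring, and assumes the offsets do not overlap.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical sketch: wrap the original text at known [start, end) offsets.
class OffsetHighlightSketch {

    static String highlight(String text, List<int[]> offsets, String pre, String post) {
        List<int[]> sorted = new ArrayList<>(offsets);
        sorted.sort(Comparator.comparingInt(o -> o[0]));
        StringBuilder sb = new StringBuilder();
        int last = 0;
        for (int[] o : sorted) {
            sb.append(text, last, o[0]).append(pre).append(text, o[0], o[1]).append(post);
            last = o[1];
        }
        sb.append(text.substring(last));
        return sb.toString();
    }

    public static void main(String[] args) {
        String subject = "java一门编程语言,用java的人很多,编程语言也不少,但是java最流行";
        // Offsets a WITH_OFFSETS term vector would report for "java", assuming an analyzer
        // (such as StandardAnalyzer) that separates "java" from the Chinese characters.
        List<int[]> javaOffsets = Arrays.asList(new int[] {0, 4}, new int[] {12, 16}, new int[] {31, 35});
        System.out.println(highlight(subject, javaOffsets, "<font color='red'>", "</font>"));
    }
}
```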